3. Analytic continuation with the ana_cont library¶

There is no general-purpose main program of ana_cont. Therefore the user has to write a script according to his needs. This is very simple and some examples are presented in this notebook.

First we have to import some libraries.

[1]:

import sys, os

import numpy as np

import matplotlib.pyplot as plt

The ana_cont library can be imported directly, if it was installed properly. If not, or if there are multiple installations in your system, you should add the path to your favourite version:

[2]:

sys.path.insert(0, os.environ['HOME']+'/Programs/ana_cont')

import ana_cont.continuation as cont

3.1. Continuation of a fermionic Green’s function¶

A fermionic Green’s function \(G(z)\) has complex values, and its values on the Matsubara frequencies are related to the spectral function \(A(w)\) by

The same relation holds for the self-energy as well, if the Hartree term is subtracted [J.M.Luttinger, Phys. Rev. 121, 942 (1961)].

Note that the spectral function is normalized to 1 only for diagonal elements of Green’s functions. Diagonal elements of the self-energy are normalized to its first moment. Off-diagonal elements are normalized to 0. This simple example deals only with diagonal elements, off-diagonal elements are considered in a separate notebook.

Here we consider the continuation from fermionic Matsubara frequencies to real frequencies. It is of course also possible to continue from imaginary time to real frequencies, but this has two disadvantages: First, if data are obtained from CT-QMC, they need to be collected in imaginary-time bins, which reduces accuracy in steep regions. (The binned value does not in general coincide with the actual value at the bin center.) Fourier-transformed data can be obtained directly by NFFT, without any binning error. Second, the Matsubara-representation is more compact, meaning that much fewer Matsubara frequencies than imaginary-time bins are needed. This makes the linear-algebra operations cheaper and thus the continuation faster. For the sake of completeness let it be mentioned that the package continues \(G(-\tau)\) for positive values of \(\tau\), i.e. positive values.

3.1.1. Generate test data¶

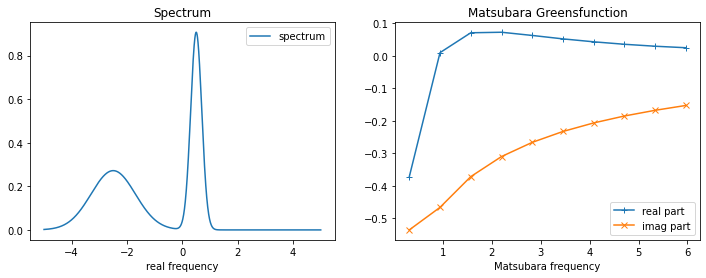

[3]:

w_real = np.linspace(-5., 5., num=2001, endpoint=True)

spec_real = np.exp(-(w_real-0.5)**2 / (2.*0.2**2))

spec_real += 0.3 * np.exp(-(w_real+2.5)**2 / (2.*0.8**2))

spec_real /= np.trapz(spec_real, w_real) # normalization

beta = 10.

wn = np.pi/beta * (2.*np.arange(10) + 1.)

noise_amplitude = 1e-4 # create gaussian noise

rng = np.random.RandomState(123)

noise_abs = rng.normal(0., noise_amplitude, wn.shape[0])

noise_phase = rng.uniform(0., 2.*np.pi, wn.shape[0])

noise = noise_abs * np.exp(1j * noise_phase)

kernel = 1./(1j*wn[:,None] - w_real[None,:])

gf_mats = np.trapz(kernel*spec_real[None,:], w_real, axis=1) + noise

fig,ax = plt.subplots(ncols=2, nrows=1, figsize=(12,4))

ax[0].plot(w_real, spec_real, label='spectrum')

ax[0].legend()

ax[0].set_xlabel('real frequency')

ax[0].set_title('Spectrum')

ax[1].plot(wn, gf_mats.real, label='real part', marker='+')

ax[1].plot(wn, gf_mats.imag, label='imag part', marker='x')

ax[1].legend()

ax[1].set_xlabel('Matsubara frequency')

ax[1].set_title('Matsubara Greensfunction')

plt.show()

3.1.2. Create the continuation problem¶

The first important part of the analytic continuation script is the creation of an AnalyticContinuationProblem object. If the analytic continuation is done by the Maximum entropy method, we need the following input: * Matsubara frequency grid (im_axis). Only positive Matsubara frequencies should be used for the continuation. The number of frequencies to be used depends on temperature, but one should use only the region that contains physical information, i.e. the low-frequency region.

The behaviour in the high-frequency region is trivial and encoded in the kernel. * Real frequency grid (re_axis). The real frequency grid must cover the whole bandwidth of the spectrum (ideally a bit more). It does not need to be equispaced. On the contrary, it is often of advantage to make it denser in regions, where the spectral function is expected to have a complicated structure. * Imaginary axis data (im_data). The Green’s function data belonging to the Matsubara frequencies given

above. If you continue a self-energy, make sure that you subtracted the Hartree term. You might also like to normalize it by its first moment. * Kind of the Green’s function (kernel_mode). If you want to continue a fermionic Green’s function or self energy from Matsubara frequencies, use kernel_mode='freq_fermionic'

[4]:

w = np.linspace(-5., 5., num=501, endpoint=True)

probl = cont.AnalyticContinuationProblem(im_axis=wn, re_axis=w,

im_data=gf_mats, kernel_mode='freq_fermionic')

3.1.3. Solve the continuation problem¶

method='maxent_svd'. This algorithm needs the following additional information: * Alpha determination algorithm. It is recommended to use alpha_determination='chi2kink', since the “classic” and “Bryan” algorithms in practice frequently lead to overfitting. * Error estimate: passed to the solver through the keyword stdev. It must have the same shape as im_axis.model. For a first try, use a flat default model. However, you can use an arbitrarily creative model. By experience a function that strongly decays in the high-frequency region can be useful. It is also possible to re-use a spectrum that was obtained from continuation with strong underfitting.[5]:

err = np.ones_like(wn)*noise_amplitude

model = np.ones_like(w)

model /= np.trapz(model, w)

sol,_ = probl.solve(method='maxent_svd', alpha_determination='chi2kink', optimizer='newton', stdev=err, model=model)

501 data points on real axis

20 data points on imaginary axis

19 significant singular values

Precomputation of coefficient matrices...

log10(alpha) = 9.00, chi2 = 2.517e+07, S = -6.597e-04, nfev = 13, norm = 1.019

log10(alpha) = 8.00, chi2 = 1.282e+07, S = -2.855e-02, nfev = 18, norm = 1.115

log10(alpha) = 7.00, chi2 = 2.701e+06, S = -1.925e-01, nfev = 20, norm = 1.177

log10(alpha) = 6.00, chi2 = 2.082e+05, S = -5.159e-01, nfev = 43, norm = 1.127

log10(alpha) = 5.00, chi2 = 1.098e+04, S = -7.399e-01, nfev = 39, norm = 1.057

log10(alpha) = 4.00, chi2 = 1.048e+03, S = -8.667e-01, nfev = 34, norm = 1.020

log10(alpha) = 3.00, chi2 = 5.258e+01, S = -9.805e-01, nfev = 22, norm = 1.006

log10(alpha) = 2.00, chi2 = 1.426e+01, S = -1.025e+00, nfev = 36, norm = 1.001

log10(alpha) = 1.00, chi2 = 1.036e+01, S = -1.076e+00, nfev = 25, norm = 1.000

log10(alpha) = 0.00, chi2 = 9.911e+00, S = -1.144e+00, nfev = 75, norm = 0.999

log10(alpha) = -1.00, chi2 = 9.686e+00, S = -1.546e+00, nfev = 33, norm = 0.999

log10(alpha) = -2.00, chi2 = 9.612e+00, S = -2.573e+00, nfev = 74, norm = 0.999

log10(alpha) = -3.00, chi2 = 9.602e+00, S = -3.731e+00, nfev = 77, norm = 0.999

Optimal log alpha 2.1715267252423462

log10(alpha) = 2.17, chi2 = 1.598e+01, S = -1.018e+00, nfev = 16, norm = 1.002

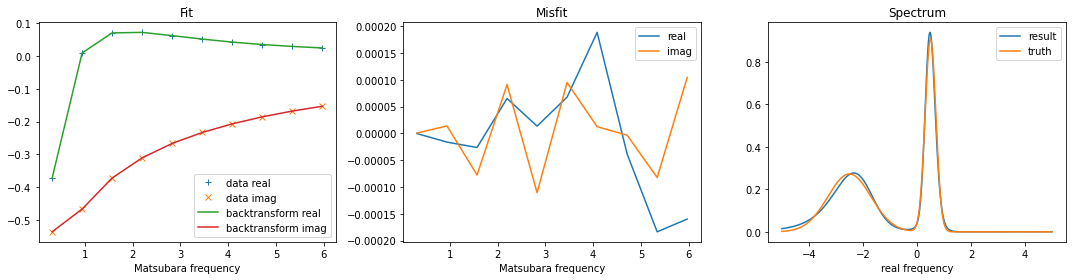

3.1.4. Plot the result¶

Now we can plot the result of the continuation. It is highly recommended to look at two kinds of plots. First, one should look at the backtransform and compare it to the original data. (Plotting one over another, or the difference.) The backtransform should be a good fit, but of course you should not try to fit noise. Second, one should check, if the result looks reasonably. In the case of noise-fitting, peaks become sharp and hight, and even additional peaks can appear. Another typical symptom is that spectral weight is shifted to the borders of the real-frequency interval.

[6]:

fig, ax = plt.subplots(ncols=3, nrows=1, figsize=(15,4))

ax[0].plot(wn, gf_mats.real, linestyle='None', marker='+', label='data real')

ax[0].plot(wn, gf_mats.imag, linestyle='None', marker='x', label='data imag')

ax[0].plot(wn, sol.backtransform.real, label='backtransform real')

ax[0].plot(wn, sol.backtransform.imag, label='backtransform imag')

ax[0].legend()

ax[0].set_xlabel('Matsubara frequency')

ax[0].set_title('Fit')

ax[1].plot(wn, gf_mats.real-sol.backtransform.real, label='real')

ax[1].plot(wn, gf_mats.imag-sol.backtransform.imag, label='imag')

ax[1].legend()

ax[1].set_xlabel('Matsubara frequency')

ax[1].set_title('Misfit')

ax[2].plot(w, sol.A_opt, label='result')

ax[2].plot(w_real, spec_real, label='truth')

ax[2].legend()

ax[2].set_xlabel('real frequency')

ax[2].set_title('Spectrum')

plt.tight_layout()

plt.show()

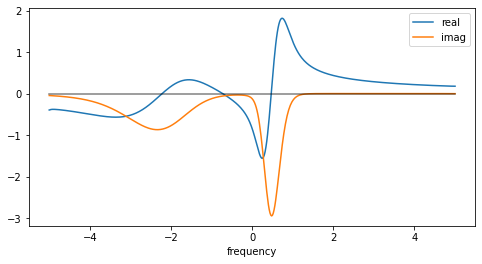

3.2. Constructing a full complex-valued Green’s function from a spectrum¶

Especially when one continues a self-energy, one usually wants not only its spectrum, but rather the full Green’s function. The spectrum is related to the imaginary part of the retarded Green’s function:

The Kramers-Kronig relations provide a way to get the real part from the imaginary part:

This functionality is implemented in the class GreensFunction, which has a method kkt(). The following line of code produces a warning, which can be safely ignored. (In the integration kernel, a division \(0/0\) occurs. The respective value is set by hand, however.)

[7]:

gf_full = cont.GreensFunction(spectrum=sol.A_opt, wgrid=w, kind='fermionic').kkt()

[8]:

fig = plt.figure(figsize=(8,4))

plt.plot(w, gf_full.real, label='real')

plt.plot(w, gf_full.imag, label='imag')

plt.plot(w, np.zeros_like(w), color='black', alpha=0.5)

plt.xlabel('frequency')

plt.legend()

plt.show()

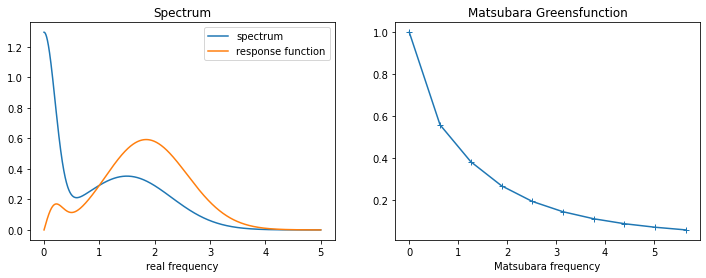

3.3. Continuation of a bosonic Green’s function¶

The continuation of a bosonic Green’s function (response function) works very similar to the fermionic case. It is arguably even simpler. There are howvever a few points to consider: * Trivial but a common source of mistakes: you have to use bosonic Matsubara frequencies. * The implemented kernel assumes that bosonic Green’s functions are purely real on the imaginary axis. * Only positive real frequencies must be used, as the symmetry of bosonic spectra is encoded in the kernel. * At \(\omega = i\omega_0 = 0\), the kernel diverges. This is avoided by defining the spectral function as done below. We nevertheless get a 0/0 warning, but the respective value is set manually, so don’t worry.

The problem of the last point is mitigated by defining the spectrum as \(S(\omega) = \frac{2}{\pi} \frac{Im G(\omega)}{\omega}\), where \(G\) is the actual response function. This leads to the relation

Apart from the differences just mentioned, the continuation workflow is the same as in the fermionic case. Therefore, it is recommended to read the above section about fermionic continuation first. In the following only the comments only address differences in the bosonic case.

3.3.1. Generate test data¶

[9]:

w_real = np.linspace(0., 5., num=2001, endpoint=True)

spec_real = np.exp(-(w_real)**2 / (2.*0.2**2))

spec_real += 0.3 * np.exp(-(w_real-1.5)**2 / (2.*0.8**2))

spec_real += 0.3 * np.exp(-(w_real+1.5)**2 / (2.*0.8**2))# must be symmetric around 0!

spec_real /= np.trapz(spec_real, w_real) # normalization

beta = 10.

wn = 2. * np.pi/beta * np.arange(10)

noise_amplitude = 1e-4 # create gaussian noise

rng = np.random.RandomState(1234)

noise = rng.normal(0., noise_amplitude, wn.shape[0])

with np.errstate(invalid="ignore"):

kernel = (w_real**2)[None,:]/((wn**2)[:,None] + (w_real**2)[None,:])

kernel[0,0] = 1.

gf_bos = np.trapz(kernel*spec_real[None,:], w_real, axis=1) + noise

norm = gf_bos[0]

gf_bos /= norm

fig,ax = plt.subplots(ncols=2, nrows=1, figsize=(12,4))

ax[0].plot(w_real, spec_real, label='spectrum')

ax[0].plot(w_real, w_real*spec_real, label='response function')

ax[0].legend()

ax[0].set_xlabel('real frequency')

ax[0].set_title('Spectrum')

ax[1].plot(wn, gf_bos.real, marker='+')

ax[1].set_xlabel('Matsubara frequency')

ax[1].set_title('Matsubara Greensfunction')

plt.show()

3.3.2. Set the problem¶

Use only positive frequencies

Use bosonic Matsubara frequencies

Use

kernel_mode='freq_bosonic'

[10]:

w = np.linspace(0., 5., num=501, endpoint=True)

probl = cont.AnalyticContinuationProblem(im_axis=wn, re_axis=w,

im_data=gf_bos, kernel_mode='freq_bosonic')

3.3.3. Solve the problem¶

[11]:

err = np.ones_like(wn) * noise_amplitude / norm

model = np.ones_like(w)

model /= np.trapz(model, w)

sol,_ = probl.solve(method='maxent_svd', alpha_determination='chi2kink', optimizer='newton', stdev=err, model=model)

501 data points on real axis

10 data points on imaginary axis

9 significant singular values

Precomputation of coefficient matrices...

log10(alpha) = 9.00, chi2 = 3.113e+07, S = -2.813e-03, nfev = 15, norm = 0.935

log10(alpha) = 8.00, chi2 = 1.284e+07, S = -3.527e-02, nfev = 19, norm = 0.823

log10(alpha) = 7.00, chi2 = 2.004e+06, S = -2.242e-01, nfev = 20, norm = 0.907

log10(alpha) = 6.00, chi2 = 7.631e+04, S = -4.289e-01, nfev = 31, norm = 0.987

log10(alpha) = 5.00, chi2 = 8.535e+03, S = -5.194e-01, nfev = 21, norm = 0.998

log10(alpha) = 4.00, chi2 = 2.695e+02, S = -6.141e-01, nfev = 21, norm = 1.000

log10(alpha) = 3.00, chi2 = 1.324e+01, S = -6.385e-01, nfev = 19, norm = 1.000

log10(alpha) = 2.00, chi2 = 7.493e+00, S = -6.473e-01, nfev = 34, norm = 1.000

log10(alpha) = 1.00, chi2 = 4.765e+00, S = -6.916e-01, nfev = 25, norm = 1.000

log10(alpha) = 0.00, chi2 = 4.163e+00, S = -7.789e-01, nfev = 27, norm = 1.000

log10(alpha) = -1.00, chi2 = 3.828e+00, S = -1.391e+00, nfev = 30, norm = 1.000

log10(alpha) = -2.00, chi2 = 3.742e+00, S = -2.537e+00, nfev = 33, norm = 1.000

log10(alpha) = -3.00, chi2 = 3.733e+00, S = -3.708e+00, nfev = 36, norm = 1.000

Optimal log alpha 2.331280045009786

log10(alpha) = 2.33, chi2 = 8.742e+00, S = -6.429e-01, nfev = 17, norm = 1.000

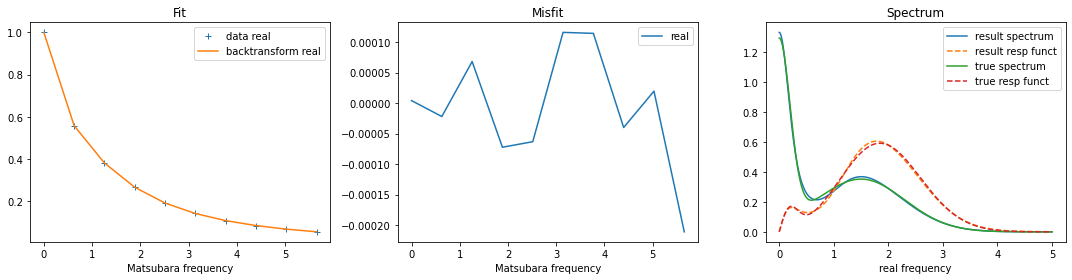

3.3.4. Plot the results¶

Remember that the code calculates the spectrum. To get the response function on the real axis, multiply the spectrum by \(\omega\)!

[12]:

fig, ax = plt.subplots(ncols=3, nrows=1, figsize=(15,4))

ax[0].plot(wn, gf_bos.real, linestyle='None', marker='+', label='data real')

ax[0].plot(wn, sol.backtransform.real, label='backtransform real')

ax[0].legend()

ax[0].set_xlabel('Matsubara frequency')

ax[0].set_title('Fit')

ax[1].plot(wn, gf_bos.real-sol.backtransform.real, label='real')

ax[1].legend()

ax[1].set_xlabel('Matsubara frequency')

ax[1].set_title('Misfit')

ax[2].plot(w, sol.A_opt, label='result spectrum')

ax[2].plot(w, w*sol.A_opt, label='result resp funct', linestyle='--')

ax[2].plot(w_real, spec_real, label='true spectrum')

ax[2].plot(w_real, w_real*spec_real, label='true resp funct', linestyle='--')

ax[2].legend()

ax[2].set_xlabel('real frequency')

ax[2].set_title('Spectrum')

plt.tight_layout()

plt.show()

[ ]: